[et_pb_section admin_label=”section”]

[et_pb_row admin_label=”row”]

[et_pb_column type=”4_4″][et_pb_text admin_label=”Text”]

Neuromorphic Vision

Neuromorphic Camera

Team Members: Lakshmi A, Sathyaprakash Narayanan

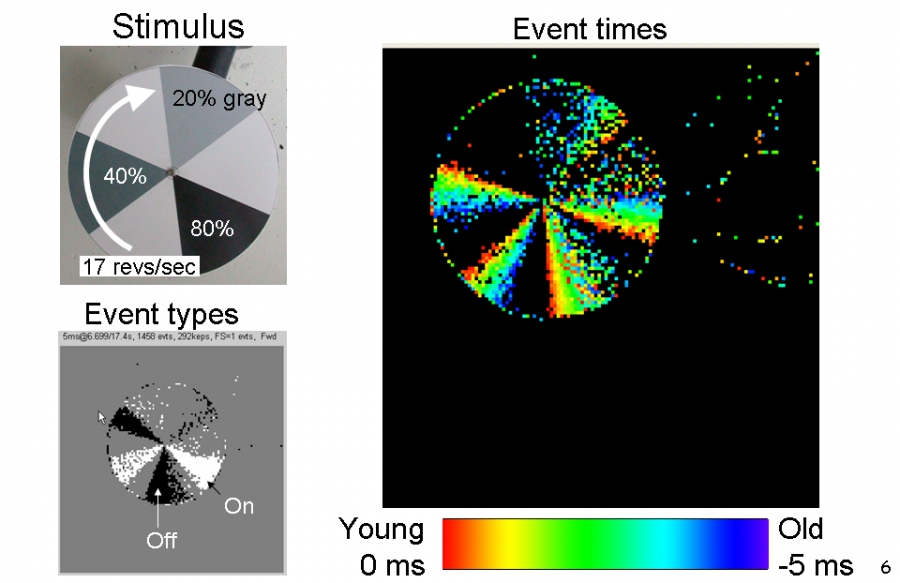

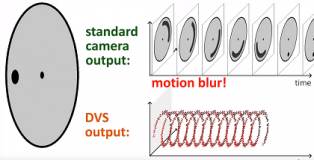

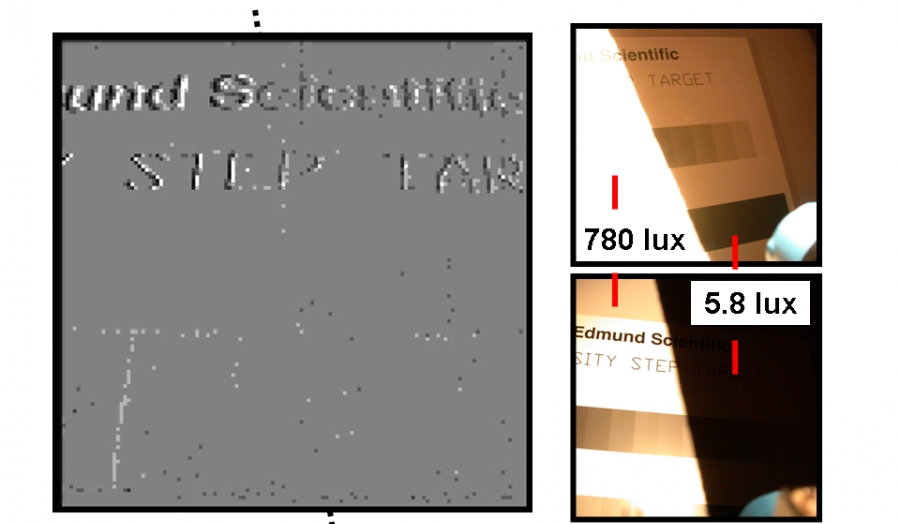

This research work explores neuromorphic vision sensor processing to enable high-speed, low-power robotics and defence applications. Despite the successes of conventional frame-based cameras, they have significant drawbacks: redundant data and temporal latency. These cause problems in applications where low-latency transmission and high-speed processing are mandatory. Following this line of thought, neuromorphic vision sensors adapt the neurobiological principles of the biological retina to achieve data sparsity and high dynamic range at the pixel level. These bio-inspired sensors alleviate the serious bottleneck of data redundancy by responding to changes in illumination rather than to illumination itself. Spatio-temporal encoding of event data lets vision processing exploit temporal correlation in addition to spatial correlation, which makes it more robust. The two best-known silicon retinas are the Dynamic Vision Sensor (DVS) and the Asynchronous Time-based Image Sensor (ATIS).

Figure 1: Illustration of the time resolution of an event camera

Figure 2: DVS output when viewing a rotating dot

Figure 3: DVS output under a wide range of illumination
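To make "responding to changes in illumination" concrete, here is a minimal Python sketch of an idealized event-generation model. It does not describe any particular sensor's circuitry. It assumes that a pixel fires an event when its log intensity changes by at least a contrast threshold, and it simplifies the asynchronous per-pixel behaviour to a comparison between two intensity snapshots. The names `Event`, `frames_to_events`, `threshold` and `eps` are hypothetical and chosen for illustration.

```python
import math
from typing import List, NamedTuple


class Event(NamedTuple):
    x: int    # pixel column
    y: int    # pixel row
    t: float  # timestamp in seconds
    p: int    # polarity: +1 = brighter, -1 = darker


def frames_to_events(prev: List[List[float]], curr: List[List[float]], t: float,
                     threshold: float = 0.2, eps: float = 1e-3) -> List[Event]:
    """Idealized DVS model (an assumption): emit an event wherever the change in
    log intensity between two snapshots reaches the contrast threshold."""
    events: List[Event] = []
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (before, after) in enumerate(zip(row_prev, row_curr)):
            # eps avoids log(0) for completely dark pixels
            delta = math.log(after + eps) - math.log(before + eps)
            if abs(delta) >= threshold:
                events.append(Event(x, y, t, 1 if delta > 0 else -1))
    return events


prev = [[0.5, 0.5, 0.5],
        [0.5, 0.5, 0.5]]
curr = [[0.5, 1.0, 0.5],   # one pixel gets brighter
        [0.5, 0.5, 0.1]]   # one pixel gets darker
events = frames_to_events(prev, curr, t=0.001)
print(events)
# [Event(x=1, y=0, t=0.001, p=1), Event(x=2, y=1, t=0.001, p=-1)]
```

In this toy example only the two pixels whose brightness changed produce events, while the static pixels produce nothing, which is where the data sparsity comes from. Because the threshold is applied to log intensity, the response depends on relative rather than absolute change, one reason such sensors cope with a high dynamic range.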

Vision algorithms for event data should stem from the computational aspects of biological vision. Bio-inspired vision is a developing field that demands a merger of biological vision and computer vision. A survey of event-based vision algorithms can be found in [1].

[1] Lakshmi, Annamalai, Anirban Chakraborty, and Chetan S. Thakur. “Neuromorphic vision: From sensors to event‐based algorithms.” Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery (2019): e1310.

Activity Recognition

Team Members: Sathyaprakash Narayanan

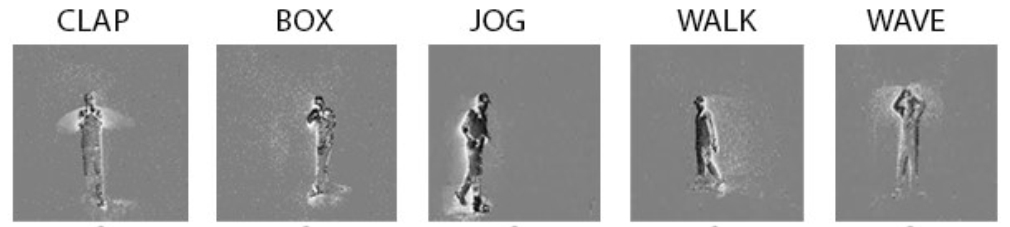

Activity analysis is about understanding motion patterns. In both event-based and frame-based activity recognition, the objective is to decode the most relevant motion embedded in the scene and use machine learning to perform the analytics. Current deep learning models do this well on frame-based data.
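One common way to let frame-based deep models work on event data is to turn the event stream into frame-like surfaces. The Python sketch below builds an exponentially decaying time surface: each pixel stores `exp(-(t_ref - t_last) / tau)`, where `t_last` is the timestamp of that pixel's most recent event up to the reference time `t_ref`. This is a generic time-surface construction offered as an assumption for illustration; it is not necessarily the memory-surface formulation used in [2], and the function names and the decay constant `tau` are hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple

Event = Tuple[int, int, float, int]  # (x, y, t, polarity); polarity is ignored here


def time_surface(events: Sequence[Event], width: int, height: int,
                 t_ref: float, tau: float = 0.05) -> List[List[float]]:
    """Exponentially decaying time surface at time t_ref (generic construction)."""
    last_t: List[List[Optional[float]]] = [[None] * width for _ in range(height)]
    for x, y, t, _polarity in events:
        if t <= t_ref:  # only events that have already happened count
            prev = last_t[y][x]
            last_t[y][x] = t if prev is None else max(prev, t)
    surface = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            t_last = last_t[y][x]
            if t_last is not None:
                # recent activity is close to 1, old activity decays toward 0
                surface[y][x] = math.exp(-(t_ref - t_last) / tau)
    return surface


def clip_to_surfaces(events: Sequence[Event], width: int, height: int,
                     t_start: float, t_end: float, n_frames: int,
                     tau: float = 0.05) -> List[List[List[float]]]:
    """Sample n_frames surfaces at evenly spaced reference times in (t_start, t_end]."""
    step = (t_end - t_start) / n_frames
    return [time_surface(events, width, height, t_start + (i + 1) * step, tau)
            for i in range(n_frames)]


events = [(0, 0, 0.00, 1), (1, 0, 0.05, -1), (1, 0, 0.10, 1), (0, 1, 0.20, 1)]
surface = time_surface(events, width=2, height=2, t_ref=0.10)
# surface[0][1] is 1.0 (event exactly at t_ref), surface[0][0] is exp(-2) ≈ 0.135,
# and the bottom row stays 0.0 because its only event lies after t_ref.
```

Each surface is an ordinary 2D array, so a sequence of them can be fed to a frame-based network; `tau` trades off how much motion history each surface retains.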

The proposed activity recognition network [2] works as follows. Each input to the model is a set of 40 frames. We extract rich spatial features from each frame by passing it through a 2D convolutional network pre-trained on the ImageNet dataset; we used the InceptionV3 model for this feature extraction. The features are the output of the deepest average pooling layer of InceptionV3. A softmax classifier at the output performs activity classification. The different activities are shown in Fig. 4.

Figure 4: Five different activities classified using the event-based vision algorithm
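A minimal Python sketch of the classification stage is given below, assuming per-frame features have already been extracted. In Keras, for instance, `tf.keras.applications.InceptionV3(include_top=False, weights="imagenet", pooling="avg")` returns a 2048-dimensional vector per image from the final average-pooling layer. How the 40 per-frame vectors are combined is not stated above, so this sketch assumes simple averaging over time followed by a linear layer and a softmax over the five activities of Fig. 4. The weights are random placeholders, not trained parameters, and the function names are hypothetical.

```python
import math
import random
from typing import List, Sequence

FEATURE_DIM = 2048  # InceptionV3 global-average-pooling output size
NUM_FRAMES = 40     # frames per input clip (from the text)
NUM_CLASSES = 5     # activities shown in Fig. 4


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_mean(frame_features: Sequence[Sequence[float]]) -> List[float]:
    """Average the per-frame feature vectors over time (an assumption)."""
    n = len(frame_features)
    return [sum(column) / n for column in zip(*frame_features)]


def classify_clip(frame_features: Sequence[Sequence[float]],
                  weights: Sequence[Sequence[float]],
                  bias: Sequence[float]) -> List[float]:
    """Linear layer + softmax on the pooled clip feature; returns class probabilities."""
    pooled = temporal_mean(frame_features)
    logits = [sum(w * f for w, f in zip(row, pooled)) + b
              for row, b in zip(weights, bias)]
    return softmax(logits)


# Placeholder inputs: random "features" and untrained weights, for shape checking only.
rng = random.Random(0)
features = [[rng.random() for _ in range(FEATURE_DIM)] for _ in range(NUM_FRAMES)]
weights = [[rng.gauss(0.0, 0.01) for _ in range(FEATURE_DIM)] for _ in range(NUM_CLASSES)]
bias = [0.0] * NUM_CLASSES
probs = classify_clip(features, weights, bias)
predicted_class = max(range(NUM_CLASSES), key=probs.__getitem__)
```

Averaging is the simplest order-insensitive way to pool over time; a recurrent or temporal-convolution layer in its place would also capture the order of the frames.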

[2] Pradhan, B. R., Bethi, Y., Narayanan, S., Chakraborty, A., & Thakur, C. S. (2019, May). N-HAR: A Neuromorphic Event-Based Human Activity Recognition System using Memory Surfaces. In 2019 IEEE International Symposium on Circuits and Systems (ISCAS) (pp. 1-5). IEEE.

Anomaly Detection

Team Members: Lakshmi A

Surveillance is an effective way to ensure security. Hence, modelling activity patterns and recognizing peculiar events (anomaly detection) is a critical technology. In the last few years, anomaly detection has been revolutionized by deep learning pipelines that learn representative features to model the distribution of the normal class and measure the deviation from this representation as an anomaly score. Anomaly detection on data captured by conventional frame-based cameras is computationally challenging because of its high-dimensional structure. Conventional cameras capture a lot of spatially redundant data, such as static background, while compromising on temporal resolution. In anomaly detection, the separability between the normal and anomalous classes is correlated with the temporal resolution. Dynamic vision sensors alleviate this disadvantage by recording only the pixels that undergo a change in intensity, which yields spatially sparse data and a stream of events at microsecond time resolution. The advantages of using neuromorphic cameras for anomaly detection are:

• Spatial sparseness of the data helps speed up anomaly detection

• Higher temporal resolution contributes to higher anomaly recognition accuracy
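The general recipe described above, modelling the normal class and scoring deviations from it, can be illustrated with a deliberately simple Python sketch. It is not the EvAn method of [3]: it assumes hypothetical hand-crafted features (event counts over a coarse grid of cells) and a per-feature Gaussian model of normal behaviour, and takes the anomaly score to be the mean squared z-score. Counting by iterating over events rather than over every pixel also shows why spatial sparsity helps: the cost grows with the number of events, not with the sensor resolution.

```python
import math
from typing import List, Sequence, Tuple

Event = Tuple[int, int, float, int]  # (x, y, t, polarity)


def cell_counts(events: Sequence[Event], width: int, height: int,
                cell: int = 4) -> List[float]:
    """Event histogram over a coarse grid; loops over events only, never over pixels."""
    cols = (width + cell - 1) // cell
    rows = (height + cell - 1) // cell
    counts = [0.0] * (rows * cols)
    for x, y, _t, _p in events:
        counts[(y // cell) * cols + (x // cell)] += 1
    return counts


def fit_normal(samples: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """Per-feature mean and standard deviation of the normal training windows."""
    n, dim = len(samples), len(samples[0])
    mean = [sum(s[i] for s in samples) / n for i in range(dim)]
    std = [math.sqrt(sum((s[i] - mean[i]) ** 2 for s in samples) / n) for i in range(dim)]
    return mean, std


def anomaly_score(sample: Sequence[float], mean: Sequence[float],
                  std: Sequence[float], min_std: float = 1.0) -> float:
    """Mean squared z-score; min_std (an assumption) keeps features that never
    varied in the normal data from producing unbounded scores."""
    return sum(((v - m) / max(s, min_std)) ** 2
               for v, m, s in zip(sample, mean, std)) / len(sample)


normal_windows = [
    [(1, 1, 0.00, 1), (2, 1, 0.01, -1)],
    [(1, 2, 0.10, 1), (2, 2, 0.11, 1), (5, 1, 0.12, -1)],
]
mean, std = fit_normal([cell_counts(w, 8, 8) for w in normal_windows])
usual = cell_counts([(1, 1, 0.20, 1), (2, 2, 0.21, -1)], 8, 8)
unusual = cell_counts([(1, 1, 0.30, 1), (6, 6, 0.30, 1), (7, 6, 0.30, 1), (6, 7, 0.30, -1)], 8, 8)
```

Here the burst of activity in a cell that stayed silent in the normal windows gives `unusual` a much higher score than `usual`.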

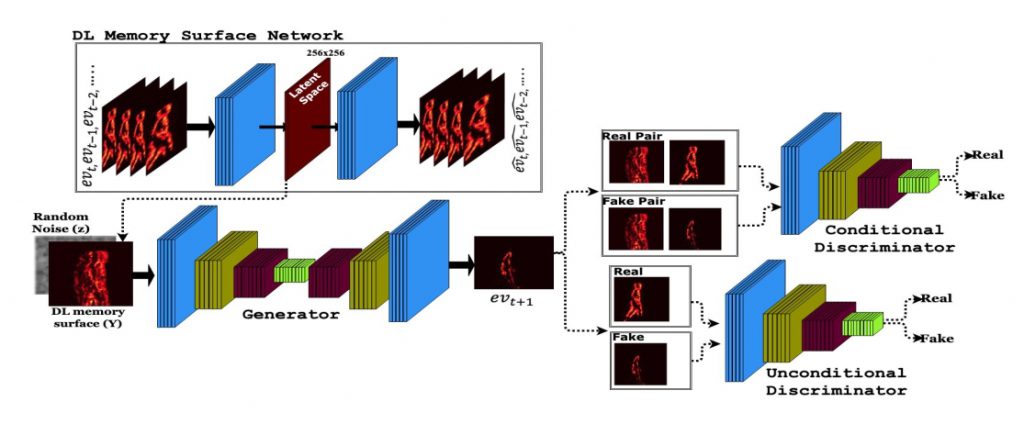

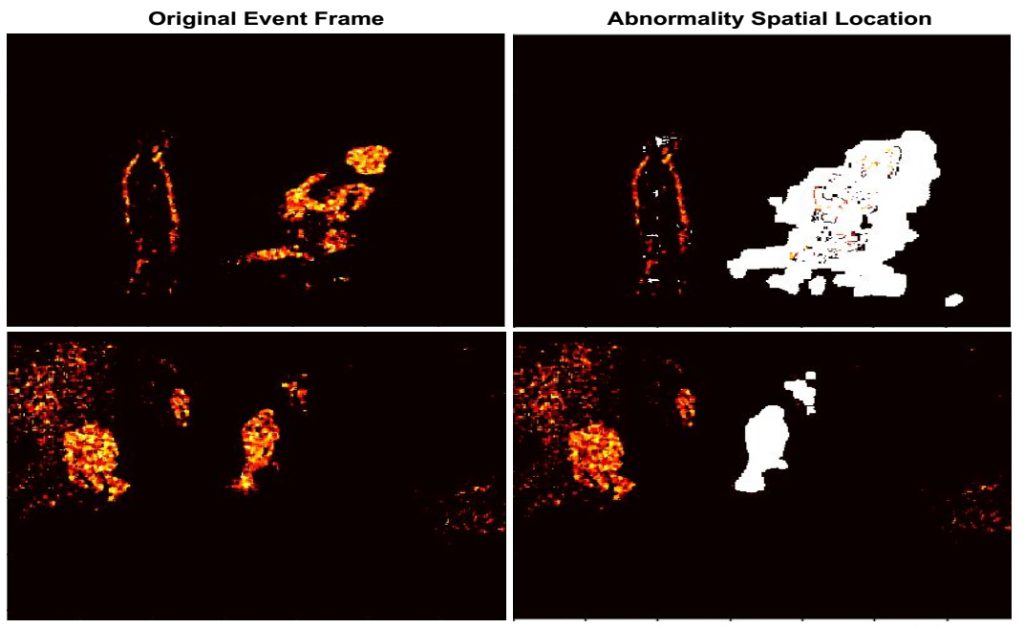

The pipeline of our event data prediction framework for anomaly detection [3] is shown in Fig. 5. In our methodology, we formulate anomaly detection as a conditional generative problem that predicts future events conditioned on past events. Events that the network cannot predict at test time are declared anomalies. To predict future events, we train a conditional GAN with two discriminators, one operating in the conditional setting and the other in the unconditional setting. Because the GAN generator is deep, predicting future events conditioned on previous events leads to a computationally heavy model. To make the computation faster, we introduce a deep learning (DL) memory surface generation network that tries to capture the coherence of motion from a given set of events. Fig. 6 visualizes the localization of abnormalities.

Figure 5: Framework of anomaly detection network

Figure 6: Anomaly detection results
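The decision rule described above, declaring as anomalous the events the network fails to predict, can be sketched in Python as a comparison between a predicted event frame and the observed one. The generator itself is not reproduced here: `predicted` stands in for the output of a trained future-event predictor, frames are assumed to hold per-pixel event activity in [0, 1], and the per-pixel absolute error with a fixed threshold is an illustrative choice rather than the exact scoring used in [3]. The function name and threshold value are hypothetical.

```python
from typing import List, Tuple

Frame = List[List[float]]  # per-pixel event activity, assumed to lie in [0, 1]


def localize_anomalies(predicted: Frame, observed: Frame,
                       threshold: float = 0.5) -> Tuple[float, List[Tuple[int, int]]]:
    """Return a frame-level anomaly score and the (x, y) pixels whose
    observed activity the predictor failed to anticipate."""
    errors = [[abs(p - o) for p, o in zip(row_p, row_o)]
              for row_p, row_o in zip(predicted, observed)]
    # frame-level score: mean absolute prediction error over all pixels
    score = sum(sum(row) for row in errors) / (len(errors) * len(errors[0]))
    # localization: pixels whose prediction error exceeds the threshold
    anomalous = [(x, y) for y, row in enumerate(errors)
                 for x, e in enumerate(row) if e > threshold]
    return score, anomalous


predicted = [[0.0, 0.9],
             [0.0, 0.0]]
observed = [[0.0, 1.0],
            [1.0, 0.0]]  # activity at (x=0, y=1) was not predicted
score, pixels = localize_anomalies(predicted, observed)
```

The small error at the well-predicted pixel stays below the threshold, so only the unexpected activity is localized; the frame-level score can be thresholded separately to flag whole time windows.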

[3] Annamalai, Lakshmi, Anirban Chakraborty, and Chetan Singh Thakur. “EvAn: Neuromorphic Event-based Anomaly Detection.” arXiv preprint arXiv:1911.09722 (2019).

[/et_pb_text][/et_pb_column]

[/et_pb_row]

[/et_pb_section]