Analog AI Computing

ARYABHAT – Analog Reconfigurable technologY And Bias-scalable Hardware for AI Tasks

Team Members: Pratik Kumar, Ankita Nandi, Shantanu Chakrabartty, Chetan Singh Thakur

Deep learning accelerators (also called AI accelerators) have the potential to significantly increase the efficiency of deep neural network tasks, for both training and inference. These accelerators are designed for high-volume computation, offering dramatically more data movement per joule. However, their designs are severely constrained by a fixed power budget, and they occupy a large silicon area. Power consumption can range from hundreds of watts to a few megawatts [1]. This is a severe challenge in many applications, and it makes the established trend of achieving better performance by increasing area unsustainable in the near future. The result is a fundamental hardware bottleneck in the design of digital AI accelerators. Analog hardware accelerators, however, offer a promising way forward.

Current research has barely scratched the surface of high-performance analog accelerator design. To date, the power density and performance benefits of analog designs remain unmatched by their digital counterparts. Such analog accelerators can not only achieve similar performance in a much smaller area but can also be orders of magnitude more energy-efficient. However, designing and scaling such analog compute systems is often very challenging because of non-linear artifacts, including bias dependency, mismatch, and the dependence of the design on process technology. To overcome these shortcomings, the objective of this work was to create a truly scalable analog computing framework, from algorithm to system prototype, and to demonstrate its feasibility on machine learning and deep neural network tasks.

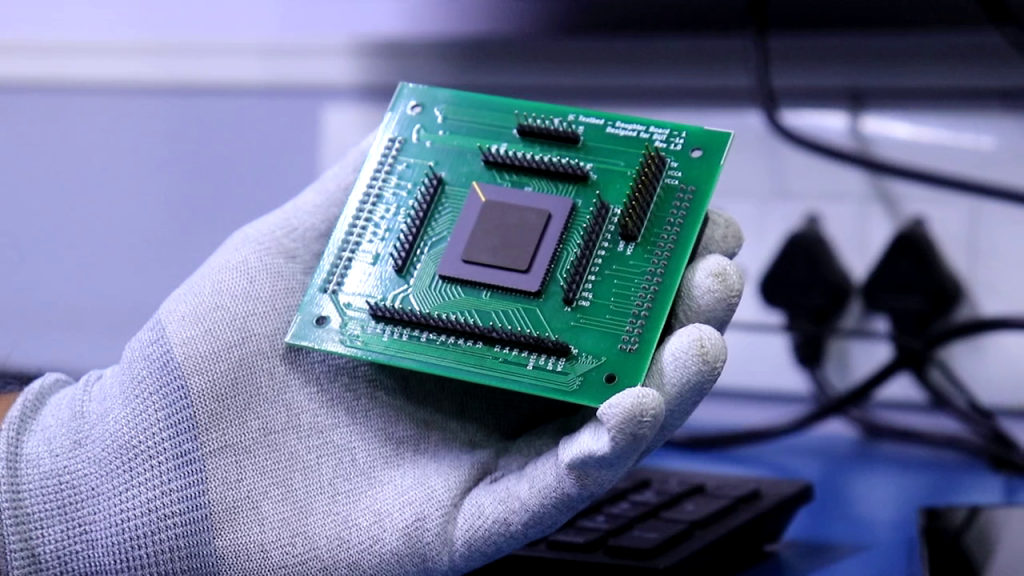

Fig. 1 | ARYABHAT Version 1

This work began in late 2019 with the development of a novel analog computing framework called Shape-based Analog Computing. This framework allowed us to design analog circuits based on approximate shape rather than precise computation. Using Shape-based Analog Computing, we created robust analog standard cells [2, 3] for computational functions widely used in standard ML tasks. We then used these analog standard cells to create high-performance analog computing cores.

Fig. 2 | Demonstration of real-time chip testing

- [1] A. Reuther, P. Michaleas, M. Jones, V. Gadepally, S. Samsi, and J. Kepner, “Survey and benchmarking of machine learning accelerators,” in 2019 IEEE High Performance Extreme Computing Conference (HPEC), pp. 1–9, IEEE, 2019.

- [2] P. Kumar, A. Nandi, S. Chakrabartty, and C. S. Thakur, “Theory and implementation of process and temperature scalable shape-based CMOS analog circuits,” arXiv preprint arXiv:2205.05664, 2022.

- [3] P. Kumar, A. Nandi, S. Chakrabartty, and C. S. Thakur, “CMOS circuits for shape-based analog machine learning,” arXiv preprint arXiv:2202.05022, 2022.