Motivation: Video cameras capture a scene in 2D, so depth information is lost. This limits the use of visual sensors in applications that need to compute distances. The limitation can be overcome by using two cameras, as in binocular vision, also called stereo vision. Stereo vision brings its own challenges. The most obvious one is that it requires capturing twice as much data as a single camera. The whole video pipeline must process frames very quickly, and reaching a high frame rate at good resolution is difficult because of the sheer amount of computation involved. For an HD image (1280 x 720, 24-bit color) at 30 fps, the data rate is about 83 MB/s per camera, and any image-processing pipeline that has to sustain this frame rate must finish all processing on each frame within 33.33 ms. Such a tight time budget is hard to meet with CPU computation alone: a CPU offers only limited parallelism and ends up processing the large pixel matrix of each image largely sequentially. The achieved performance is summarized below:
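The data-rate and time-budget figures above follow from simple arithmetic. The sketch below uses only the resolution, color depth and frame rate stated in the text (decimal megabytes, 1 MB = 10^6 bytes) and also shows the doubled rate for the stereo pair:

```python
# Back-of-the-envelope budget for the capture pipeline described above.
WIDTH, HEIGHT = 1280, 720   # HD resolution
BYTES_PER_PIXEL = 3         # 24-bit RGB
FPS = 30


def stream_budget(width, height, bytes_per_pixel, fps, cameras=1):
    """Return (bytes per second, per-frame time budget in milliseconds)."""
    frame_bytes = width * height * bytes_per_pixel
    rate = frame_bytes * fps * cameras
    budget_ms = 1000.0 / fps
    return rate, budget_ms


single_rate, budget_ms = stream_budget(WIDTH, HEIGHT, BYTES_PER_PIXEL, FPS)
stereo_rate, _ = stream_budget(WIDTH, HEIGHT, BYTES_PER_PIXEL, FPS, cameras=2)

print(f"one camera:  {single_rate / 1e6:.1f} MB/s")   # 82.9 MB/s
print(f"stereo pair: {stereo_rate / 1e6:.1f} MB/s")   # 165.9 MB/s
print(f"time budget per frame: {budget_ms:.2f} ms")   # 33.33 ms
```

The per-frame budget does not change with the number of cameras, but the amount of data that must be processed inside it doubles for the stereo pair.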

| Key Specification | Details |
|---|---|
| Pipeline Architecture | High-performance, hardware-accelerated GStreamer pipeline |
| API for Capture | NVIDIA Argus |
| Input Streams | Two live HD streams, each 1280 x 720 (RGB) |
| Deep Learning Integration | Caffe (Convolutional Architecture for Fast Feature Embedding) |
| Disparity Computation | GPU-accelerated using CUDA and OpenCV2 |
| Performance | |

In the depth computation application, these limitations are overcome by using the GPU for image processing, as discussed in the following sections.

Application details: The Jetson Nano board has an NVIDIA Maxwell-architecture GPU with 128 CUDA cores. The CPU is a quad-core ARM Cortex-A57 MPCore processor.

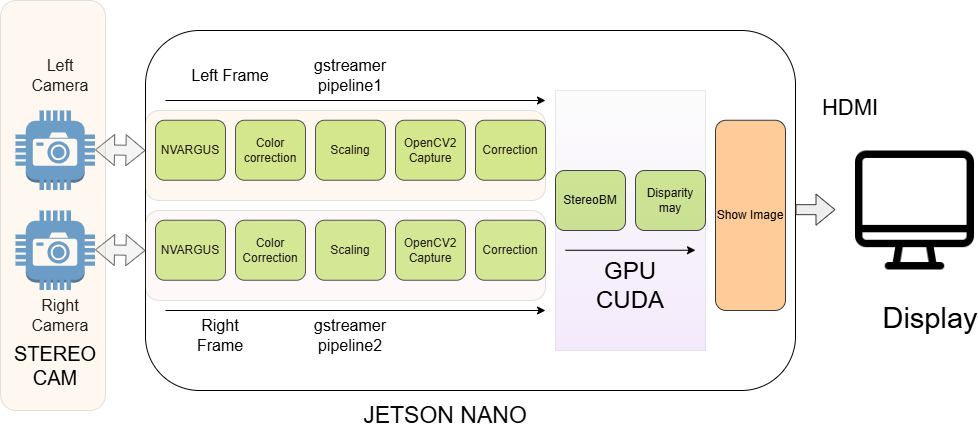

Figure 1: Functional Block Diagram and Video Processing Pipeline

The left and right images are captured in two separate threads using the GStreamer pipeline.
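A minimal sketch of two-threaded capture follows. It assumes a Jetson running JetPack, which provides the `nvarguscamerasrc` (Argus) and `nvvidconv` GStreamer elements, an OpenCV build with GStreamer support, and CSI cameras on sensor IDs 0 and 1. The exact pipeline string used in this project is not given, so `argus_pipeline` and `CameraThread` are hypothetical helpers. Each thread keeps only the most recent frame so that a slow consumer never stalls either camera.

```python
import threading


def argus_pipeline(sensor_id, width=1280, height=720, fps=30):
    """Build a GStreamer launch string for one CSI camera (hypothetical helper).

    Assumes the nvarguscamerasrc / nvvidconv elements shipped with JetPack.
    Frames are converted to BGR so OpenCV can consume them from appsink.
    """
    return (
        f"nvarguscamerasrc sensor-id={sensor_id} ! "
        f"video/x-raw(memory:NVMM), width={width}, height={height}, "
        f"format=NV12, framerate={fps}/1 ! "
        f"nvvidconv ! video/x-raw, format=BGRx ! "
        f"videoconvert ! video/x-raw, format=BGR ! "
        f"appsink drop=true max-buffers=1"
    )


class CameraThread(threading.Thread):
    """Grab frames from one camera in the background, keeping only the latest."""

    def __init__(self, sensor_id):
        super().__init__(daemon=True)
        self.pipeline = argus_pipeline(sensor_id)
        self.latest = None
        self.lock = threading.Lock()
        self.running = True

    def run(self):
        import cv2  # imported here so the pipeline builder works without OpenCV

        cap = cv2.VideoCapture(self.pipeline, cv2.CAP_GSTREAMER)
        while self.running:
            ok, frame = cap.read()
            if not ok:
                break
            with self.lock:
                self.latest = frame  # overwrite: only the newest frame matters
        cap.release()

    def read(self):
        """Return the most recent frame, or None if nothing has arrived yet."""
        with self.lock:
            return self.latest
```

Usage would be along the lines of `left, right = CameraThread(0), CameraThread(1)`, then `left.start()` and `right.start()`, with the processing loop calling `left.read()` and `right.read()`. Pairing the latest frames this way is a simplification: it does not hardware-synchronize the two sensors.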

1. Rectification: The images are corrected using the camera parameters precomputed during camera calibration. At the end of this step, the images are corrected for lens distortion and their rows are aligned, so they are ready for stereo block matching.

2. Gaussian filtering: This step reduces noise in the images.

3. Stereo block matching: The algorithm divides the left and right images into small rectangular blocks of pixels. For each block in the left image, it searches for a matching block in the right image. The search is constrained to the same horizontal line because, after rectification, the only difference between corresponding points in the two images is a horizontal shift, the disparity.

4. Displaying the disparity map: After block matching has found the disparity for the pixels where a match exists, the result is displayed using a color map. Higher disparity, which corresponds to closer objects, is shown in red, while shades of blue denote objects that are farther away. This is shown in Figure 2.
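To make steps 2 to 4 concrete, here is a deliberately simple pure-Python sketch operating on small grayscale images stored as lists of lists. It assumes the inputs are already rectified (step 1 needs the calibration data, which is not reproduced here), uses a sum-of-absolute-differences (SAD) matching cost, and maps disparity onto a linear blue-to-red ramp. `depth_from_disparity` illustrates why higher disparity means a closer object, using the standard rectified-stereo relation Z = f * B / d; the focal length and baseline are hypothetical parameters. This is not the project's GPU code: at 1280 x 720 a pure-Python loop would be far too slow, which is why the real pipeline offloads this work to CUDA and OpenCV.

```python
import math


def gaussian_kernel(size=5, sigma=1.0):
    """1-D Gaussian weights, normalised to sum to 1."""
    half = size // 2
    w = [math.exp(-(i * i) / (2 * sigma * sigma)) for i in range(-half, half + 1)]
    s = sum(w)
    return [x / s for x in w]


def gaussian_blur(img, size=5, sigma=1.0):
    """Separable Gaussian blur (rows, then columns), clamping at the borders."""
    k = gaussian_kernel(size, sigma)
    half = size // 2
    h, w = len(img), len(img[0])
    rows = [[sum(k[j] * img[y][min(max(x + j - half, 0), w - 1)] for j in range(size))
             for x in range(w)] for y in range(h)]
    return [[sum(k[j] * rows[min(max(y + j - half, 0), h - 1)][x] for j in range(size))
             for x in range(w)] for y in range(h)]


def block_match(left, right, block=5, max_disp=16):
    """Per-pixel disparity (0..max_disp) by SAD search along the same row.

    A point at column x in the left image is expected at column x - d in the
    right image. Border pixels that cannot hold a full block stay at 0.
    """
    h, w = len(left), len(left[0])
    half = block // 2
    disp = [[0] * w for _ in range(h)]
    for y in range(half, h - half):
        for x in range(half, w - half):
            best, best_d = float("inf"), 0
            for d in range(0, min(max_disp, x - half) + 1):
                sad = sum(abs(left[y + dy][x + dx] - right[y + dy][x + dx - d])
                          for dy in range(-half, half + 1)
                          for dx in range(-half, half + 1))
                if sad < best:
                    best, best_d = sad, d
            disp[y][x] = best_d
    return disp


def disparity_to_rgb(d, max_disp):
    """Linear ramp: far (low disparity) -> blue, near (high disparity) -> red.

    Returns (R, G, B); note that OpenCV images use BGR channel order.
    """
    t = min(max(d / max_disp, 0.0), 1.0)
    return (int(255 * t), 0, int(255 * (1 - t)))


def depth_from_disparity(d, focal_px, baseline_m):
    """Depth in metres for a rectified pair: Z = f * B / d (infinite if d == 0)."""
    return focal_px * baseline_m / d if d > 0 else float("inf")
```

On a synthetic pair where the right image is the left image shifted by three pixels, `block_match` recovers a disparity of 3 for every interior pixel. Because Z is inversely proportional to d, doubling the disparity halves the estimated distance, which is what the red (near) to blue (far) color map conveys.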
