Aim:

This project develops a face tracking camera system using the ESP32-CAM microcontroller and the MG995 servo motor. The primary objective of the system is to track the movement of a person’s face in real time and adjust the camera’s position accordingly. Face detection is performed by a model based on the MobileNetV2 architecture, which identifies and localizes faces within the camera’s field of view. The detected face coordinates are then used to control the servo motor, allowing the camera to smoothly follow the person’s movements. By integrating face detection with servo motor control, the project demonstrates an approach to automatic face tracking for applications such as surveillance systems and human-computer interaction interfaces.

Implementation Process:

1. Hardware Setup:

•Connect the ESP32-CAM microcontroller and the MG995 servo motor.

•Ensure proper power supply and wiring connections.

•Mount the camera and servo motor in a suitable configuration.

2. Servo Motor Control:

•Write the code to control the servo motor using PWM (Pulse Width Modulation) signals.

•Use appropriate libraries or APIs to interface with the servo motor.

•Implement functions to rotate the servo motor to specific angles based on input signals.

3. AI-based Face Detection Algorithm Implementation:

•Utilize the MobileNetV2 architecture for face detection.

•Integrate the algorithm into the ESP32-CAM microcontroller.

•Configure the algorithm to process the camera feed and identify faces.

4. Integration of Face Detection with Servo Control using Proportional Controller:

•Extract the coordinates of the detected face from the face detection algorithm.

•Implement a proportional controller to map the face coordinates to servo motor control signals.

•Calculate the appropriate servo motor movement based on the deviation between the detected face position and the desired position.

•Adjust the servo motor position in real-time to align the camera with the person’s face.

Hardware Setup:

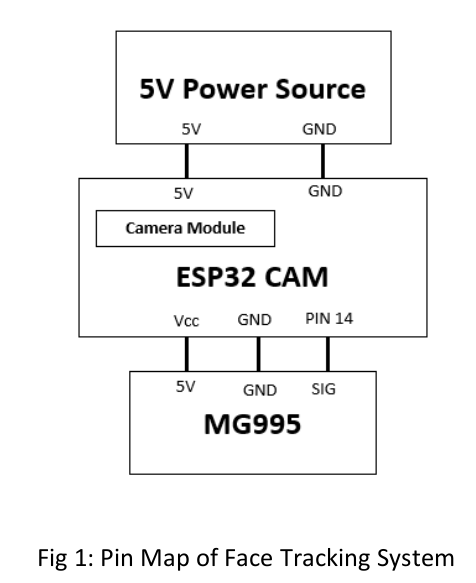

The hardware setup for the face tracking camera system involved several key components. First, the ESP32-CAM microcontroller served as the central control unit and was powered from a 5V supply. Second, the MG995 servo motor was connected to the ESP32-CAM. The servo motor’s Vcc (5V) pin was connected to the appropriate power source on the ESP32-CAM, ensuring sufficient power supply. The signal pin of the servo motor was connected to pin 14 of the ESP32-CAM, which served as the PWM output pin. This connection allowed the ESP32-CAM to send control signals to the servo motor. The camera integrated on the ESP32-CAM module is controlled over the Serial Camera Control Bus (SCCB) interface. Lastly, the ESP32-CAM ground pin was used as the common ground for the entire system, which is needed for reliable signalling and electrical stability among the ESP32-CAM, servo motor, and other components.

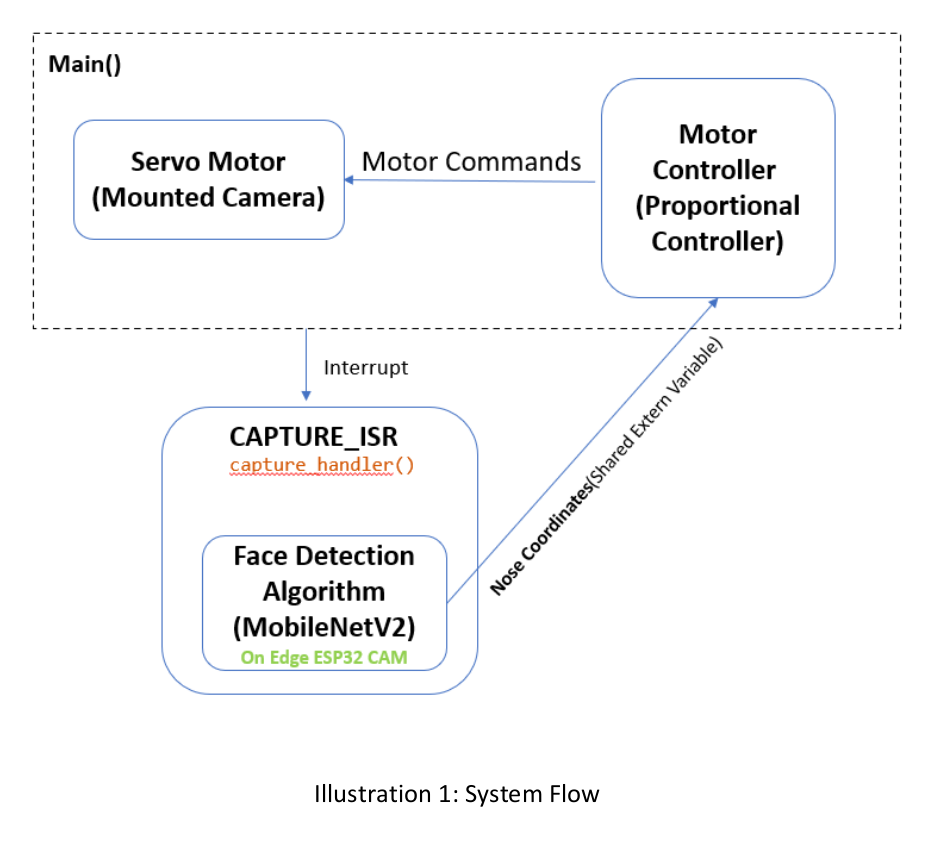

Flow Diagram:

The process flow diagram of our system is shown below.

Servo Motor Control:

In the face tracking camera system, the servo motor controlled the movement of the camera. Control was achieved by writing target angles directly to the servo. The motor was programmed to rotate so that it followed a human face within the camera’s field of view: by continuously monitoring the detected face coordinates, the system adjusted the servo position to keep the camera aligned with the face, so that the face stayed in view as the person moved.
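For context, a hobby servo is usually positioned by the width of a pulse that repeats at a fixed rate. The sketch below shows only the arithmetic behind "writing an angle". The 50 Hz rate, the 500 to 2500 µs pulse range, and the 16-bit timer resolution are assumed typical values, not figures from this project or the MG995 datasheet, and the function names are hypothetical.

```python
def angle_to_pulse_us(angle_deg, min_us=500.0, max_us=2500.0, max_angle=180.0):
    """Linearly map a servo angle (degrees) to a pulse width (microseconds).

    min_us and max_us are assumed end-point pulse widths; real servos vary.
    """
    angle = max(0.0, min(max_angle, angle_deg))  # clamp to the valid range
    return min_us + (max_us - min_us) * angle / max_angle


def pulse_to_duty(pulse_us, freq_hz=50.0, resolution_bits=16):
    """Convert a pulse width to an integer duty value for a PWM timer."""
    period_us = 1_000_000.0 / freq_hz            # 20000 us at 50 Hz
    full_scale = 2 ** resolution_bits - 1        # largest duty value
    return round(pulse_us / period_us * full_scale)


if __name__ == "__main__":
    for angle in (0, 90, 180):
        pulse = angle_to_pulse_us(angle)
        print(f"{angle:3d} deg -> {pulse:.0f} us -> duty {pulse_to_duty(pulse)}")
```

On the actual board, a servo library normally hides this conversion, so the application code only deals in angles.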

During implementation, the team initially considered a static camera case, in which the motor angles were mapped directly from the x-coordinate of the nose. This approach proved insufficient in the moving camera case, because the face coordinates are measured relative to the camera’s current orientation, and that frame of reference changes every time the servo moves. A controller, specifically a P-Controller, was therefore introduced. It adjusts the servo incrementally from its current angle, which allowed the system to track the face even while the camera was in motion.
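To see why the direct mapping breaks down, consider what such a mapping might look like. The function below is a hypothetical reconstruction, not the team's code: it assumes a 240-pixel-wide frame and a linear mapping of the pixel column onto a 0 to 180 degree range, with the direction chosen arbitrarily.

```python
FRAME_WIDTH = 240  # assumed frame width in pixels


def static_angle_from_x(x_nose, frame_width=FRAME_WIDTH, max_angle=180.0):
    """Static-camera mapping: pixel column -> absolute servo angle."""
    x = max(0, min(frame_width, x_nose))  # keep the coordinate inside the frame
    return max_angle * x / frame_width


if __name__ == "__main__":
    # A face in the middle of the frame always maps to the middle angle,
    # no matter where the camera is currently pointing.
    print(static_angle_from_x(FRAME_WIDTH // 2))
```

With a moving camera, a face centred in the frame always yields the mid-range angle. If the servo had already turned away from mid-range to centre the face, this mapping would command it back, pulling the camera off the target. An incremental controller avoids this by treating the pixel offset as a correction to the current angle rather than as an absolute position.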

Face Detection Algorithm:

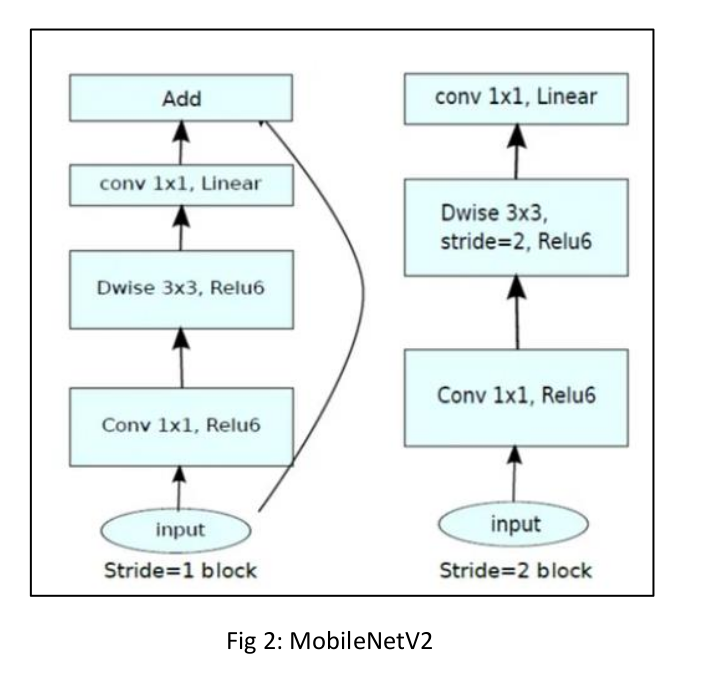

The face detection algorithm on the ESP32-CAM was built on the MobileNetV2 architecture. MobileNetV2 is a widely used lightweight convolutional neural network (CNN) architecture designed for efficient inference on edge devices such as smartphones and embedded systems, and it is commonly used in computer vision tasks, including face detection. Its small computational footprint made it suitable for the resource-constrained ESP32-CAM, allowing the module to process the camera feed and detect faces in real time.

Use of Proportional Controller to control servo angles:

What is a Proportional Controller?

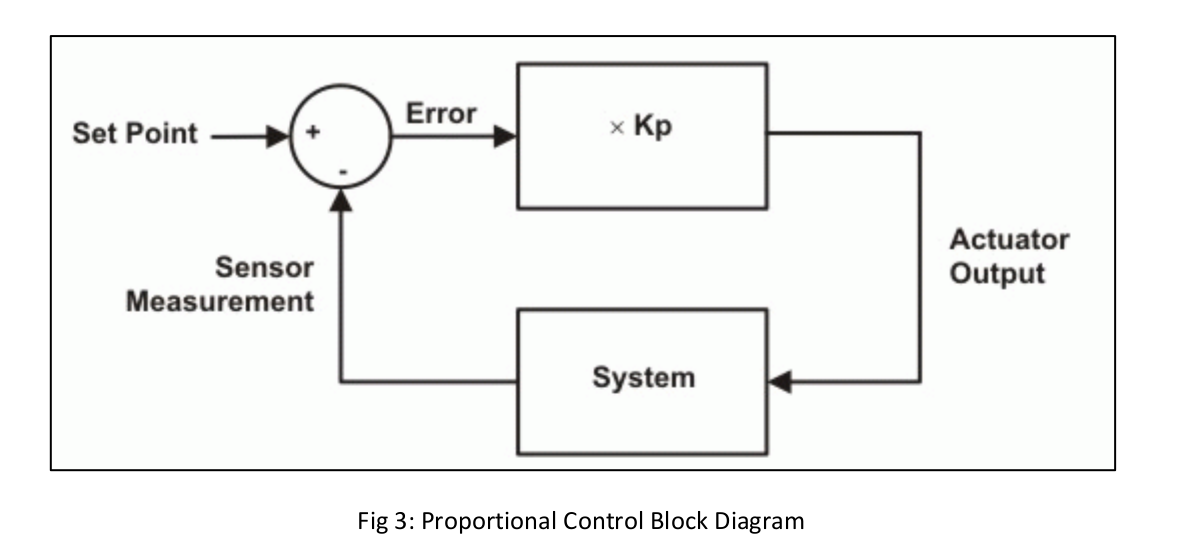

A Proportional Controller, often referred to as a P-Controller, is a type of feedback control mechanism commonly used in control systems. It operates based on the proportional relationship between the error (the difference between the desired setpoint and the actual value) and the control action. The P-Controller calculates the control output by multiplying the error by a constant gain factor known as the proportional gain (Kp).
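In symbols, writing r for the setpoint, y for the measured value, and u for the control output (notation introduced here only for illustration), the relationship is:

```latex
e(t) = r(t) - y(t), \qquad u(t) = K_p \, e(t)
```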

The P-Controller works by continuously monitoring the error and applying a control signal proportional to the error value. The control signal aims to minimize the error and bring the system closer to the desired setpoint. The greater the error, the larger the control signal applied. The proportional gain determines the sensitivity of the control system to the error. A higher gain amplifies the control signal, resulting in a faster response but potentially leading to overshooting or instability. On the other hand, a lower gain may result in a slower response but with better stability.
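The gain trade-off can be illustrated with a small simulation. This is a sketch under simplifying assumptions, not a model of the project hardware: it assumes a discrete-time system in which each unit of control output reduces the error by a fixed amount (`plant_gain`, a hypothetical value), with no delay or noise.

```python
def simulate_error(kp, plant_gain=4.0, initial_error=60.0, steps=6):
    """Return the error sequence e[k+1] = e[k] - plant_gain * (kp * e[k])."""
    errors = [initial_error]
    for _ in range(steps):
        control = kp * errors[-1]                     # P-controller output
        errors.append(errors[-1] - plant_gain * control)
    return errors


if __name__ == "__main__":
    for kp in (0.1, 0.45, 0.6):  # low, high, and too-high gain (illustrative)
        trace = ", ".join(f"{e:.1f}" for e in simulate_error(kp))
        print(f"Kp={kp}: {trace}")
```

Under these assumptions the error is multiplied by (1 - plant_gain * Kp) at every step. A low gain shrinks it gradually without changing sign, a higher gain makes it overshoot and alternate in sign while still shrinking, and a gain that is too high makes it grow at every step.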

The main advantage of a P-Controller is its simplicity and ease of implementation. It is often used in systems where the desired control response can be achieved by directly adjusting the control output proportionally to the error. However, the P-Controller may struggle to eliminate steady-state errors and may exhibit overshooting or undershooting behaviour in response to sudden changes. In more complex control scenarios, additional control mechanisms such as integral and derivative control (PI or PID control) are often combined with the P-Controller to improve overall system performance.

Implementation to provide servo angles:

1. A proportional controller was employed to track the movement of the face. At each instant, the error was calculated by comparing the position of the detected face (specifically the x-coordinate of the nose) with the desired position, the horizontal centre of the frame.

2. The angle written to the servo motor was determined by adding the delta angle (change in angle) to the previous state angle. This allowed for smooth and continuous servo movement.

3. The error was calculated using the formula: Error = (X_res / 2) - X_nose, where X_res is the width of the frame in pixels (e.g., 240 for a 240×176 frame size) and X_nose is the x-coordinate of the detected nose.

4. The delta angle was obtained by multiplying the proportional gain (Kp) by the error: Delta Angle = Kp * Error. The proportional gain determined the responsiveness and sensitivity of the system to the error.

5. Because the servo expects an absolute angle rather than a relative change, the delta angle was added to the previous state angle: Angle = Previous Angle + Delta Angle. This accounted for the current position of the servo and allowed for accurate positioning.

By utilizing the proportional controller in this manner, the system was able to dynamically adjust the position of the servo motor based on the detected face’s movement. The proportional gain and error calculation enabled precise tracking and responsive servo control, ensuring that the camera remained focused on the face throughout the movement.
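The update rule described in this section can be sketched as a single function. This is a minimal illustration, not the project's firmware. The gain value, the 0 to 180 degree clamp, and all names are assumptions introduced here. Depending on how the servo is mounted, the sign of the gain may need to be reversed.

```python
FRAME_WIDTH = 240                  # X_res for the 240x176 frame size
KP = 0.1                           # proportional gain; illustrative value only
SERVO_MIN, SERVO_MAX = 0.0, 180.0  # assumed usable range of the servo


def next_servo_angle(prev_angle, x_nose, kp=KP, frame_width=FRAME_WIDTH):
    """Return the absolute angle to write to the servo for one detection."""
    error = frame_width / 2 - x_nose   # Error = (X_res / 2) - X_nose
    delta_angle = kp * error           # Delta Angle = Kp * Error
    angle = prev_angle + delta_angle   # absolute angle = previous + delta
    return max(SERVO_MIN, min(SERVO_MAX, angle))  # stay within servo range


if __name__ == "__main__":
    angle = 90.0                       # start at mid-range
    for x_nose in (180, 150, 120):     # nose drifting back toward the centre
        angle = next_servo_angle(angle, x_nose)
        print(f"x_nose={x_nose:3d} -> servo angle {angle:.1f}")
```

When the nose sits at the frame centre (x = 120), the error is zero and the servo holds its angle. The clamp is an added safeguard so that an accumulated angle is never written outside the range the servo can reach.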

Conclusion:

In conclusion, the face tracking camera project utilizing the ESP32-CAM and MG995 servo motor successfully achieved its objectives. By implementing a lightweight and efficient face detection algorithm using MobileNetV2, the system was able to detect and track faces in real time. The integration of the servo motor with a proportional controller allowed the camera to smoothly follow the movement of the detected face. The project overcame the challenges of the moving camera case by introducing a P-Controller, which enabled dynamic adjustments to account for the changing frame of reference. The combination of hardware setup, servo motor control, and face detection algorithm implementation resulted in a working face tracking camera system. The project showcased the feasibility of utilizing embedded systems for real-time computer vision applications and demonstrated the potential for further enhancements and applications in the field of human-computer interaction and surveillance systems.

Future Scope:

1. Implementation of a second servo: Expanding the project by incorporating a second servo motor to capture face movement in the y-direction. This would enable the camera to track not only horizontal movement but also vertical movement, providing more comprehensive face tracking capabilities.

2. Optimization of face detection algorithm: Exploring methods to optimize the input and processing of the face detection algorithm (MobileNetV2) for faster and more efficient performance. This could involve techniques such as model quantization, pruning, or using hardware accelerators to speed up the algorithm’s execution.

3. Incorporation of additional tracking parameters: Enhancing the tracking system by incorporating parameters such as the maximum speed at which a human can move. This would make the tracking more robust and adaptive to various scenarios, ensuring real-time and accurate tracking even in dynamic environments.

4. Training the face detection model with noisy data: Improving the robustness of the face detection model by incorporating noisy training data. This could include data with variations in lighting conditions, occlusion, or other challenging scenarios. By training the model with such diverse data, it would become more reliable and effective in detecting faces under different environmental conditions.

code: Code

Demo:

Face Movement with Camera Tracking view

Camera Feed On Browser View
