ABSTRACT

The main aim of the project is to develop and set up an environment on an embedded system that uses machine learning to detect objects and control a toy car autonomously. The main elements of the project are the choice of an appropriate sensor and embedded platform, and of a motor-driving system and chassis, capable of running the desired machine-vision and machine-learning algorithms. For this experiment I chose the NVIDIA Jetson Nano as the platform because it supports running machine-vision algorithms on its GPU. The selected sensor is a stereo vision camera with a built-in IMU; because it captures two offset views, it can perform depth estimation in addition to conventional vision tasks. Real-time performance is provided by the GPU (graphics processing unit).

I INTRODUCTION

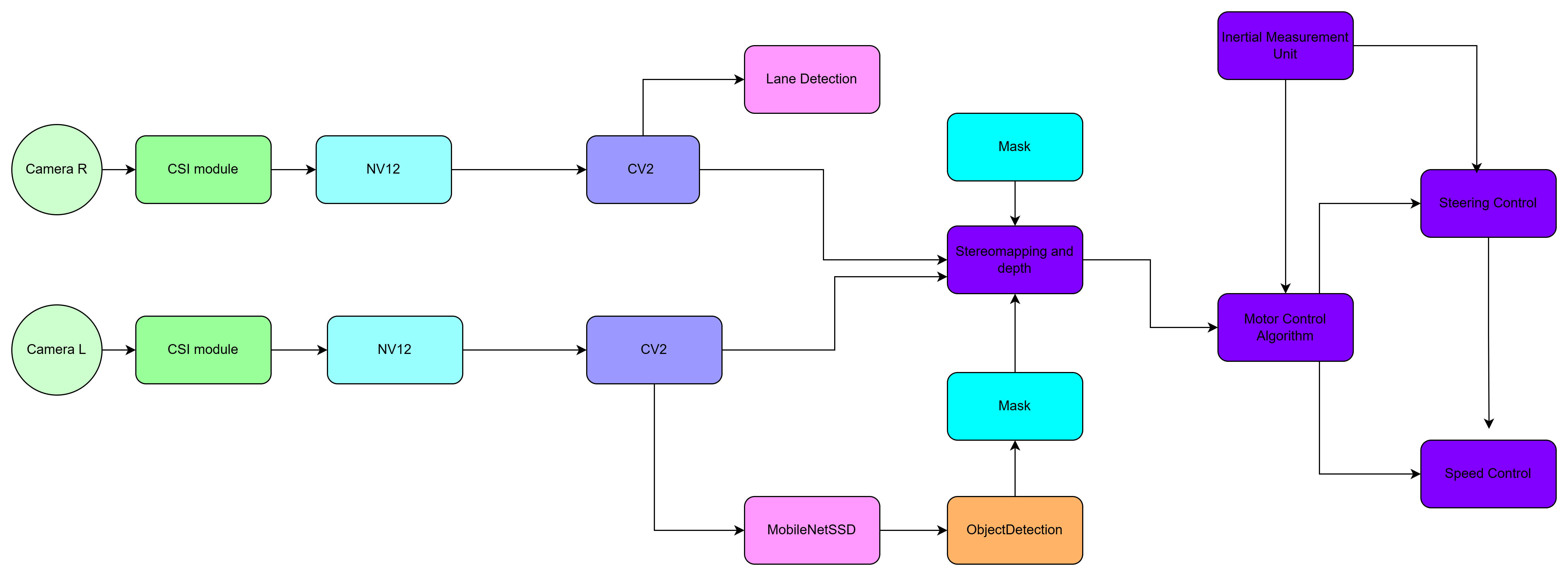

The autonomous toy car project uses the NVIDIA Jetson Nano, a compact yet powerful single-board computer that can handle tasks such as lane detection, depth estimation, and object detection, which makes it a suitable platform for developing small self-driving vehicles. The system block diagram is shown in Figure 1.

Figure 1: Block diagram of the proposed system

The key features of the project are described below.

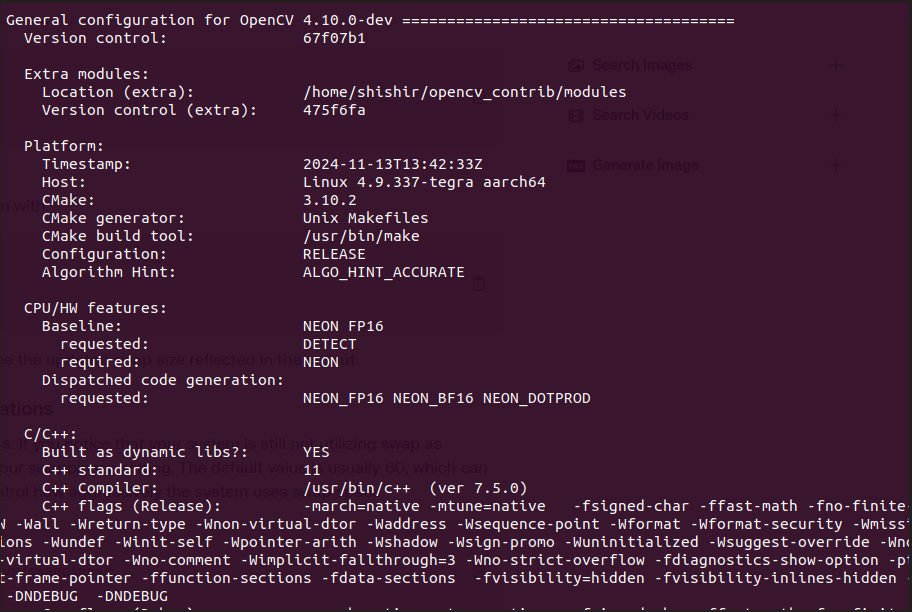

Figure 2: Showing full hardware support after successful installation

1.0.1 Lane Detection

The car uses lane-detection algorithms, which are crucial for maintaining its position on the road. The stereo camera setup is intended to let the system identify lane markings even under challenging conditions such as poor lighting or adverse weather; in the tests so far only one camera feed was used, which made detection sensitive to lighting (see Section V). Lane detection is commonly implemented with convolutional neural networks (CNNs) that process images in real time, allowing the vehicle to navigate by recognizing and following lane boundaries.
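As an illustration of the final step of lane following, the sketch below turns two already-detected lane boundaries into a normalized steering offset. It assumes the left and right boundaries are available as line segments in pixel coordinates (for example from a CNN or a Hough-transform detector); the helper names `x_at_row` and `steering_offset` are hypothetical and not part of the project's code.

```python
from typing import Tuple

# A lane boundary as a segment (x1, y1, x2, y2) in pixel coordinates.
Segment = Tuple[float, float, float, float]


def x_at_row(seg: Segment, y: float) -> float:
    """Extrapolate the lane line through `seg` to image row `y`."""
    x1, y1, x2, y2 = seg
    if y2 == y1:
        raise ValueError("a horizontal segment cannot define a lane line")
    # Linear interpolation/extrapolation along the segment.
    return x1 + (x2 - x1) * (y - y1) / (y2 - y1)


def steering_offset(left: Segment, right: Segment, width: int, height: int) -> float:
    """Lane-center offset at the bottom image row, normalized to [-1, 1].

    Negative: lane center lies left of the image center (steer left).
    Positive: lane center lies right of the image center (steer right).
    """
    y = height - 1  # evaluate at the row closest to the car
    lane_center = (x_at_row(left, y) + x_at_row(right, y)) / 2.0
    image_center = (width - 1) / 2.0
    offset = (lane_center - image_center) / image_center
    return max(-1.0, min(1.0, offset))  # clamp to the valid range


# Hypothetical boundaries for a 640 x 480 frame, roughly centered.
left_line = (100.0, 479.0, 300.0, 240.0)
right_line = (540.0, 479.0, 340.0, 240.0)
print(steering_offset(left_line, right_line, 640, 480))
```

Mapping the offset to a servo angle or differential motor command depends on the drive system, which is not shown here.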

1.0.2 Depth Estimation

Depth estimation is achieved through the stereo camera configuration, which captures two slightly offset images. By analyzing the disparity between these images, the system can gauge distances to obstacles and other objects in its environment. This capability enhances the vehicle’s awareness of its surroundings, enabling safer navigation and obstacle avoidance.
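For a rectified stereo pair, distance follows from disparity as Z = f * B / d, where f is the focal length in pixels, B the baseline between the two cameras, and d the disparity in pixels. The sketch below applies this relation. It assumes the disparity comes from OpenCV's `StereoBM`/`StereoSGBM`, which store disparity as fixed-point integers scaled by 16; the focal length and baseline are placeholders, not calibrated values for the project's camera.

```python
def disparity_from_opencv(raw_value: int) -> float:
    """Convert a StereoBM/StereoSGBM fixed-point disparity (scaled by 16) to pixels."""
    return raw_value / 16.0


def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth Z = f * B / d, in meters, for a rectified stereo pair."""
    if disparity_px <= 0:
        # Non-positive disparity means no valid match; treat the point as unknown/far.
        return float("inf")
    return focal_px * baseline_m / disparity_px


# Placeholder calibration values, for illustration only.
FOCAL_PX = 700.0   # focal length in pixels (would come from calibration)
BASELINE_M = 0.06  # distance between the two camera centers in meters

raw = 672                          # hypothetical raw value from the disparity map
d = disparity_from_opencv(raw)     # 42.0 pixels
print(depth_from_disparity(d, FOCAL_PX, BASELINE_M))
```

Because depth is inversely proportional to disparity, halving the disparity doubles the estimated distance, so depth resolution degrades quickly for far objects.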

1.0.3 Object Detection

For object detection, the project uses the MobileNet-SSD (Single Shot MultiBox Detector) model. This lightweight yet efficient neural network is designed for real-time object recognition, allowing the toy car to identify objects such as pedestrians, vehicles, and road signs. Running MobileNet-SSD on the Jetson Nano enables rapid processing of video feeds, so the car can respond promptly to dynamic environments.
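An SSD network in Caffe ends in a `DetectionOutput` layer whose result has shape 1 x 1 x N x 7, where each row is [image_id, class_id, confidence, xmin, ymin, xmax, ymax] with box corners normalized to [0, 1]. The sketch below filters those rows by confidence and scales the boxes to pixels. It is plain Python so it accepts rows from either PyCaffe or `cv2.dnn`; the function name and the 0.5 threshold are assumptions, and the meaning of each class_id depends on the label list of the model used.

```python
from typing import List, Sequence, Tuple

# (class_id, confidence, (x1, y1, x2, y2) in pixels)
Detection = Tuple[int, float, Tuple[int, int, int, int]]


def _clamp01(value: float) -> float:
    return max(0.0, min(1.0, float(value)))


def parse_ssd_detections(rows: Sequence[Sequence[float]], width: int, height: int,
                         min_confidence: float = 0.5) -> List[Detection]:
    """Convert SSD DetectionOutput rows into pixel-space boxes above a threshold."""
    detections = []
    for row in rows:
        _, class_id, confidence, xmin, ymin, xmax, ymax = row[:7]
        if confidence < min_confidence:
            continue  # drop weak detections
        # Clamp normalized corners to the image, then scale to pixels.
        box = (
            int(_clamp01(xmin) * width),
            int(_clamp01(ymin) * height),
            int(_clamp01(xmax) * width),
            int(_clamp01(ymax) * height),
        )
        detections.append((int(class_id), float(confidence), box))
    return detections
```

With PyCaffe, the rows would typically come from `net.forward()['detection_out'][0, 0]`, assuming the output blob is named `detection_out` as in common MobileNet-SSD deploy files.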

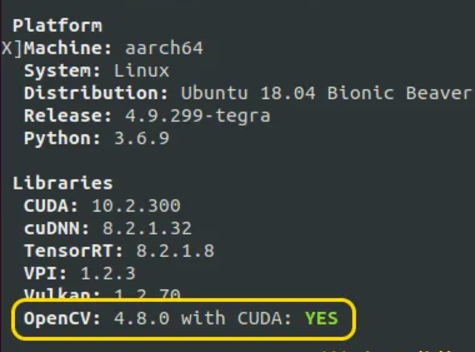

Figure 3: Successful installation of OpenCV

II JETSON NANO HARDWARE ARCHITECTURE

The Jetson Nano’s architecture includes several features that enhance its multi-camera capabilities.

2.0.1 Hardware

With a quad-core ARM Cortex-A57 CPU and a 128-core Maxwell GPU, the Jetson Nano can process multiple video streams efficiently, which makes it suitable for complex image-analysis tasks.

Flexible Connectivity: The board supports interfaces such as USB 3.0 and GPIO pins, allowing integration with different types of cameras and sensors.

The key specifications are listed below.

CPU: Quad-core ARM Cortex-A57, 64-bit architecture, operating at 1.43 GHz

Graphics Processor: NVIDIA Maxwell architecture (GM20B)

CUDA Cores: 128

GPU Performance: Up to 472 GFLOPs (FP16)

GPU Clock Speed: 640 MHz in the 5 W power mode, up to 921 MHz in the 10 W (MAXN) mode

Memory Bandwidth: The GPU shares the 4 GB of LPDDR4 system memory with the CPU, which offers a bandwidth of 25.6 GB/s through a 64-bit memory interface.

System Memory: 4 GB LPDDR4 RAM

Storage: microSD card slot for external storage

Camera Interfaces: Up to four MIPI CSI-2 camera connections, allowing multi-camera setups

USB Ports: Four USB 3.0 ports and one USB 2.0 Micro-B port for power or device mode

Video Output: HDMI 2.0 and eDP 1.4

Networking: Gigabit Ethernet (RJ45)

Size: The module measures 70 mm x 45 mm, making it compact enough for integration into various devices.
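The headline GPU and memory figures follow from simple arithmetic. The sketch below reproduces them under stated assumptions: each CUDA core completes one fused multiply-add (2 FLOPs) per cycle in FP32 and FP16 runs at twice that rate, the GPU clock is 921.6 MHz (rounded to 921 MHz in the specification), and the LPDDR4 memory runs at 3200 mega-transfers per second.

```python
def peak_gflops(cuda_cores: int, clock_mhz: float, flops_per_core_cycle: int) -> float:
    """Peak throughput in GFLOPs = cores * clock * FLOPs per core per cycle."""
    return cuda_cores * clock_mhz * 1e6 * flops_per_core_cycle / 1e9


def bandwidth_gb_s(bus_width_bits: int, transfers_mt_s: float) -> float:
    """Peak memory bandwidth in GB/s = bus width in bytes * transfer rate."""
    return (bus_width_bits / 8) * transfers_mt_s * 1e6 / 1e9


# Assumption: FMA (2 FLOPs) per core per cycle, doubled for packed FP16 -> 4.
fp16_gflops = peak_gflops(cuda_cores=128, clock_mhz=921.6, flops_per_core_cycle=4)
# Assumption: 64-bit LPDDR4 bus at 3200 MT/s.
bandwidth = bandwidth_gb_s(bus_width_bits=64, transfers_mt_s=3200)

print("FP16 peak: %.1f GFLOPs" % fp16_gflops)
print("Memory bandwidth: %.1f GB/s" % bandwidth)
```

At the 640 MHz clock of the 5 W mode, the same formula gives about 328 GFLOPs (FP16). These are theoretical peaks; measured throughput is lower.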

2.0.2 Software Stack

Ubuntu 18.04 LTS

Ubuntu 18.04 provides a stable environment for the Jetson Nano, ensuring compatibility with the libraries and frameworks essential for AI development. However, maintaining this version can be challenging because many packages depend on specific library versions, and newer releases of those packages may no longer support Ubuntu 18.04.

Python 3.6.9

Python is widely used in AI and machine-learning projects because of its extensive libraries and community support. Version 3.6.9 is the default Python 3 interpreter on Ubuntu 18.04, and staying on it is important because the libraries compiled for this platform are built against it; switching to a different Python version can lead to compatibility issues.

OpenCV 4.10 with CUDA Support

OpenCV (Open Source Computer Vision Library) is integral to real-time computer vision applications. Version 4.10, with CUDA support, allows the Jetson Nano to use its GPU for accelerated processing of images and videos, significantly improving performance over CPU-only operations.

Challenges in Version Management

One of the primary challenges when working with this software stack is ensuring that all components are compatible with each other. Newer versions of libraries or dependencies may introduce breaking changes or lack CUDA support on the Jetson Nano, which can lead to installation failures or runtime errors. I had to revert to older versions or seek workarounds to maintain functionality.
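One way to catch this kind of version drift is to compare the installed packages against a list of known-good pins after every change. The sketch below parses `pip freeze`-style output (also produced by `pip list --format=freeze`) and reports mismatches. The function names are hypothetical, and the example pin simply reuses the numpy 1.13 requirement reported in Section IV.

```python
from typing import Dict, List


def parse_freeze(text: str) -> Dict[str, str]:
    """Map lower-case package name -> version from `name==version` lines."""
    installed = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "==" not in line:
            continue  # skip blanks, comments, and editable/URL installs
        name, version = line.split("==", 1)
        installed[name.strip().lower()] = version.strip()
    return installed


def pin_violations(installed: Dict[str, str], pins: Dict[str, str]) -> List[str]:
    """Messages for pinned packages that are missing or at another version.

    A pin such as "1.13" matches "1.13" and any "1.13.x" release.
    """
    problems = []
    for name, wanted in sorted(pins.items()):
        have = installed.get(name.lower())
        if have is None:
            problems.append("%s: not installed (want %s)" % (name, wanted))
        elif not (have == wanted or have.startswith(wanted + ".")):
            problems.append("%s: %s installed, want %s" % (name, have, wanted))
    return problems
```

On the Jetson, the text could be obtained with `subprocess.check_output([sys.executable, "-m", "pip", "freeze"], universal_newlines=True)` and checked after each installation step.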

JetPack

JetPack 4.6.5 is a production-quality release that serves as a minor update to the previous version, JetPack 4.6.4. This version includes several enhancements and security fixes, making it essential for developers looking to build robust applications on NVIDIA’s embedded platforms.

L4T

L4T (Linux for Tegra) 32.7.5 is the underlying Linux operating system that accompanies JetPack 4.6.5. It provides the necessary drivers and kernel support for NVIDIA’s hardware.
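To confirm which L4T release is installed, the release string can be read on the device. The sketch below assumes the first line of `/etc/nv_tegra_release` has the form `# R32 (release), REVISION: 7.5, ...`, the usual format on L4T 32.x; treat this format as an assumption and adjust the pattern if the file differs.

```python
import re
from typing import Optional, Tuple

# Header line such as "# R32 (release), REVISION: 7.5, GCID: ..., BOARD: t210ref, ..."
_RELEASE_RE = re.compile(r"#\s*R(\d+)\s*\(release\),\s*REVISION:\s*(\d+)\.(\d+)")


def parse_l4t_release(text: str) -> Optional[Tuple[int, ...]]:
    """Return (major, minor, patch), e.g. (32, 7, 5), or None if not found."""
    match = _RELEASE_RE.search(text)
    if match is None:
        return None
    return tuple(int(group) for group in match.groups())


def read_l4t_release(path: str = "/etc/nv_tegra_release") -> Optional[Tuple[int, ...]]:
    """Read and parse the release file (only meaningful on the Jetson itself)."""
    with open(path) as handle:
        return parse_l4t_release(handle.read())
```

A setup script could refuse to continue unless `read_l4t_release()` returns `(32, 7, 5)`, which guards against running build steps on a mismatched JetPack image.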

III IMPLEMENTATION OF THE STACK

Building OpenCV (cv2) with CUDA support requires compiling it from source with the appropriate build options enabled; the details can be found at https://qengineering.eu/install-opencv-on-jetson-nano.html. If the guide is followed correctly, the result is an OpenCV installation with CUDA support. The whole build takes about 6 hours. If the installation is correct, jtop confirms it, as shown in Figures 2 and 3.
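A quick way to confirm that the compiled OpenCV actually has CUDA enabled is to inspect its build information. The sketch below searches the text returned by `cv2.getBuildInformation()` for the `NVIDIA CUDA:` entry. The layout of that text is assumed from typical builds, so the parser returns `False` if the entry is missing; the function names are hypothetical.

```python
def cuda_enabled_in_build(build_info: str) -> bool:
    """True if the build information has an "NVIDIA CUDA: YES ..." entry."""
    for line in build_info.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "NVIDIA CUDA":
            return value.strip().upper().startswith("YES")
    return False


def report() -> None:
    """Print the CUDA status of the installed OpenCV (run on the Jetson)."""
    import cv2  # imported here so the parser above works without OpenCV

    print("CUDA in build:", cuda_enabled_in_build(cv2.getBuildInformation()))
    # Number of CUDA devices OpenCV can use; 0 means no usable GPU support.
    print("CUDA devices:", cv2.cuda.getCudaEnabledDeviceCount())
```

Calling `report()` after the build should show CUDA enabled and one device; if either check fails, OpenCV was most likely built without the CUDA options.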

Installation of the Caffe Framework

It is difficult to maintain a working TensorFlow Lite installation on this platform, whereas Caffe provides a more dependable framework for deploying machine-learning models for object detection.

The installation guide can be found at https://qengineering.eu/install-caffe-on-jetson-nano.html. The Caffe installation does not include pretrained models; a model in .caffemodel format can be downloaded, placed in the appropriate folder, and loaded from Python. PyCaffe provides the necessary Python bindings. This step also takes 4 to 5 hours and is crucial.
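Because Caffe ships without pretrained models, the downloaded files must be placed where the Python code can find them. The sketch below checks for the network description (`.prototxt`) and the trained weights (`.caffemodel`) in one folder and then loads them with PyCaffe. The file stem `MobileNetSSD_deploy` and the single-folder layout are assumptions; adjust them to the files actually downloaded.

```python
import os
from typing import Tuple


def find_caffe_model(model_dir: str, stem: str = "MobileNetSSD_deploy") -> Tuple[str, str]:
    """Return (prototxt, caffemodel) paths, raising if either file is missing.

    The .prototxt describes the network layers; the .caffemodel holds the weights.
    """
    prototxt = os.path.join(model_dir, stem + ".prototxt")
    weights = os.path.join(model_dir, stem + ".caffemodel")
    for path in (prototxt, weights):
        if not os.path.isfile(path):
            raise FileNotFoundError("missing model file: " + path)
    return prototxt, weights


def load_net(model_dir: str):
    """Load the network with PyCaffe (requires the Caffe build on the Jetson)."""
    import caffe  # PyCaffe bindings, imported lazily

    prototxt, weights = find_caffe_model(model_dir)
    caffe.set_mode_gpu()  # run inference on the Jetson Nano's GPU
    return caffe.Net(prototxt, weights, caffe.TEST)
```

Checking both files before importing Caffe gives a clear error message when a download is incomplete, instead of a failure deep inside the framework.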

IV RESULTS AND LESSONS LEARNED

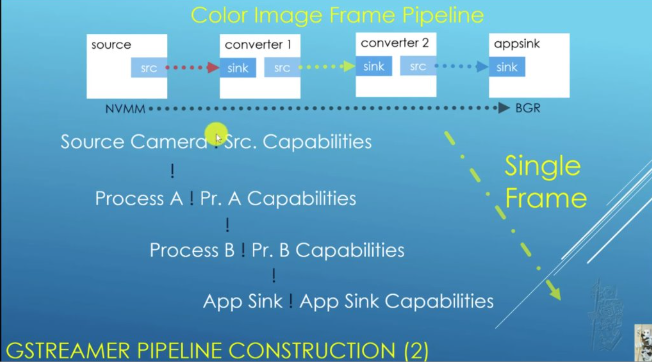

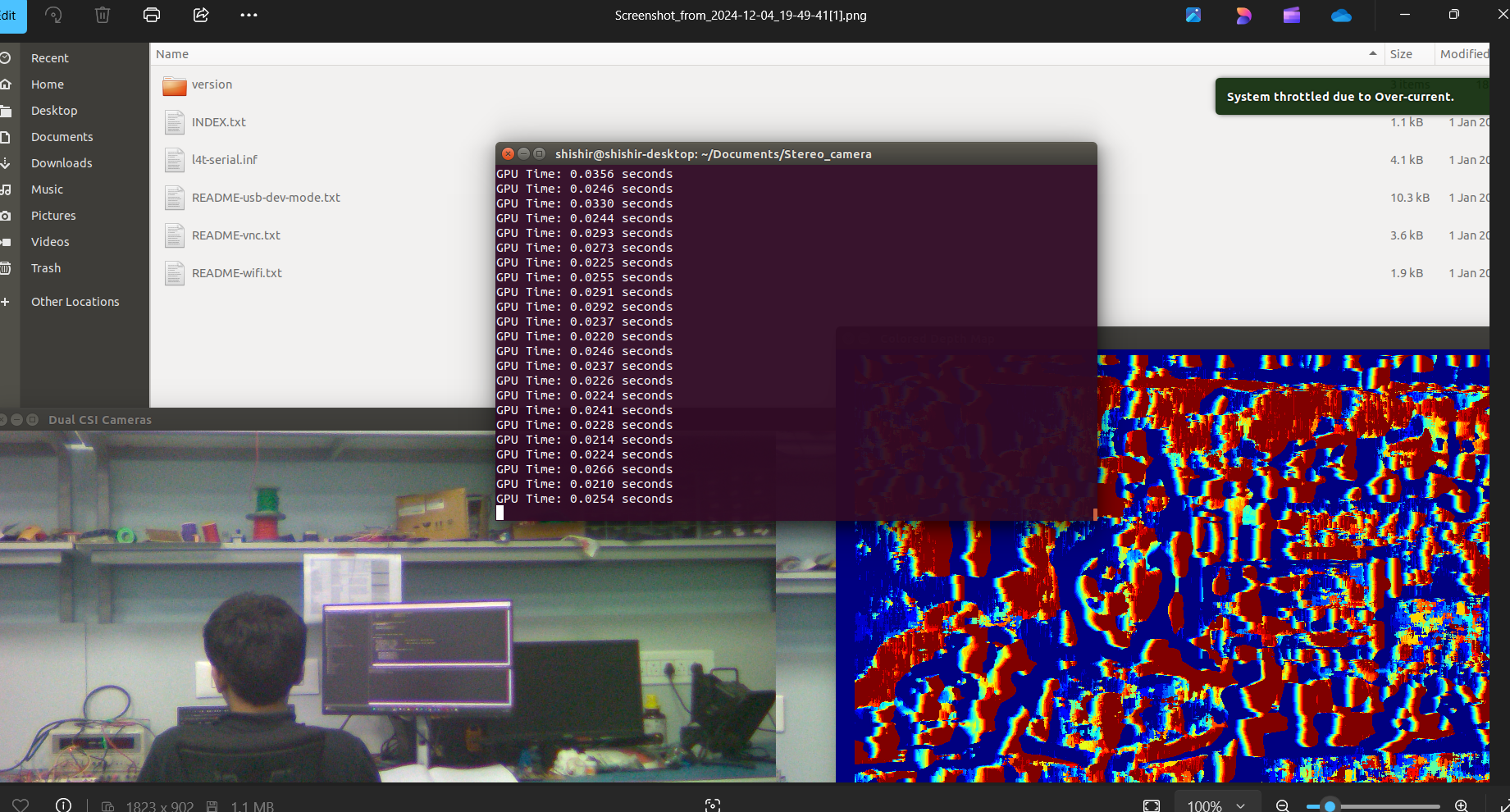

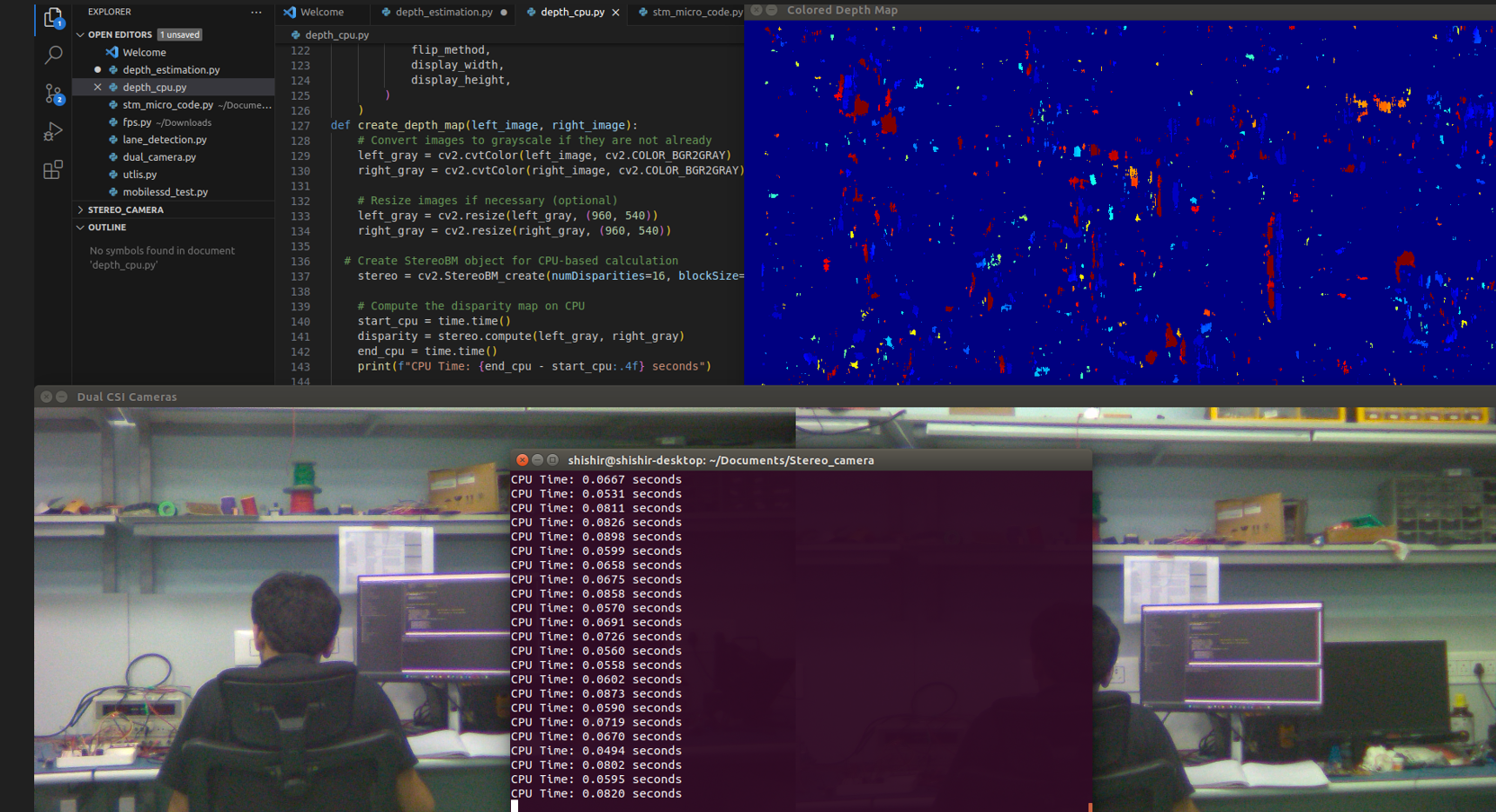

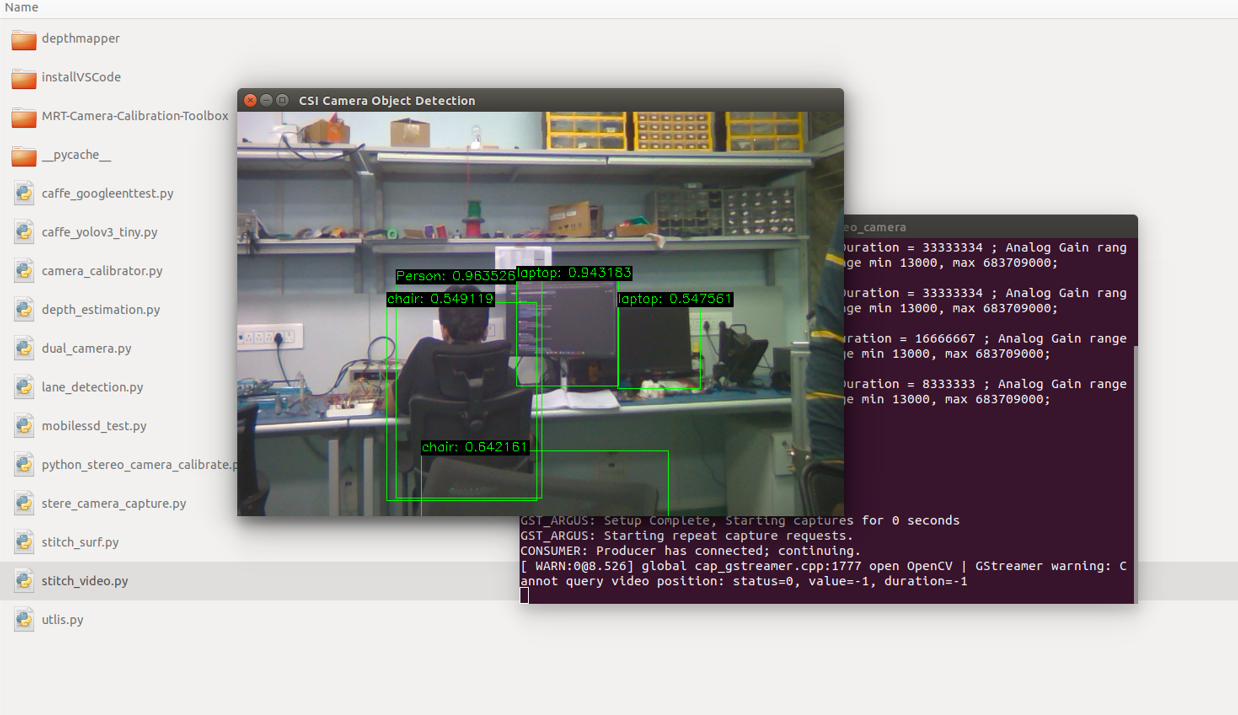

The Jetson Nano camera pipeline differs from that of a standard Pi camera setup: because of its architecture, it can run parallel pipelines. An example is given in Dual camera.py, and the pipeline construction is shown in Figure 4. The parallel streams are then converted to disparity maps using CUDA on the GPU. The camera colors need to be corrected as described in https://www.waveshare.com/wiki/IMX219-83_Stereo_Camera. Finally, to correct the camera's inherent distortion, the camera must be calibrated; the procedure is described in the OpenCV documentation. The live disparity map is computed and streamed in a separate thread, and a snapshot is shown in Figure 5. Stereo matching runs almost 4x faster on the GPU than on the CPU, as shown in Figures 6 and 7.

The MobileNetSSD.caffemodel model is trained on the COCO dataset, and the Jetson Nano runs it at 120 frames per second on 720 x 480 images. The live detection results are shown in Figure 8.

Some libraries and packages interfere with the stack and break the image pipeline, so keeping numpy at version 1.13 is important; running pip list after every change helps verify this. CMake was also updated to version 3.28, which takes another four to five hours; this is necessary for running Open3D, a useful tool for visualizing the stereo data.
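The camera pipeline can be expressed as a GStreamer string that OpenCV opens through its GStreamer backend. The sketch below builds one pipeline per CSI sensor in the common `nvarguscamerasrc ! nvvidconv ! videoconvert ! appsink` form. It is not the project's Dual camera.py, and the resolutions, frame rate, and the assignment of sensor 0 to the left camera are assumptions.

```python
from typing import Tuple


def csi_pipeline(sensor_id: int, capture_width: int = 1280, capture_height: int = 720,
                 display_width: int = 1280, display_height: int = 720,
                 framerate: int = 30, flip_method: int = 0) -> str:
    """GStreamer pipeline string for one CSI camera on the Jetson Nano.

    nvarguscamerasrc captures into NVMM (GPU) memory, nvvidconv converts and
    scales in hardware, and appsink hands BGR frames to OpenCV.
    """
    return (
        "nvarguscamerasrc sensor-id=%d ! "
        "video/x-raw(memory:NVMM), width=%d, height=%d, format=NV12, framerate=%d/1 ! "
        "nvvidconv flip-method=%d ! "
        "video/x-raw, width=%d, height=%d, format=BGRx ! "
        "videoconvert ! video/x-raw, format=BGR ! appsink drop=true max-buffers=1"
        % (sensor_id, capture_width, capture_height, framerate,
           flip_method, display_width, display_height)
    )


def stereo_pipelines(**kwargs) -> Tuple[str, str]:
    """Pipelines for the left (assumed sensor 0) and right (sensor 1) cameras."""
    return csi_pipeline(0, **kwargs), csi_pipeline(1, **kwargs)
```

Each string would then be opened with `cv2.VideoCapture(pipeline, cv2.CAP_GSTREAMER)`, one capture per camera, which requires an OpenCV build with GStreamer support. Setting `drop=true max-buffers=1` on the appsink keeps only the newest frame, which helps keep the two streams close in time.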

Figure 4: GStreamer pipeline (the correct GStreamer version is required)

Figure 5: Live disparity map

Figure 6: GPU computation time

Figure 7: CPU computation time

Figure 8: Live detection Results

V FUTURE SCOPE

The machine-learning and depth-map threads could not be integrated because of time constraints; this is left for future work. The stereo camera framework can provide very accurate depth maps, which could be used to control the vehicle by feeding this information into closed-loop control of the motors. The lane-detection model was tested on the autonomous car but was found to be very sensitive to lighting conditions because only one camera feed was used; using the stereo-matched feeds could make the algorithm more robust.
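As a starting point for the closed-loop control described above, the sketch below uses a simple proportional controller: the nearest valid depth in a region ahead of the car is compared with a stop distance, and the error is scaled into a normalized speed command. The gain, distances, and function names are hypothetical, and converting the 0 to 1 command into motor-driver signals (for example a PWM duty cycle) is not shown because the driver interface is not described here.

```python
from typing import Iterable


def nearest_depth(depths_m: Iterable[float], min_valid_m: float = 0.05) -> float:
    """Smallest valid depth in a region of interest of the depth map."""
    valid = [d for d in depths_m if min_valid_m <= d < float("inf")]
    return min(valid) if valid else float("inf")


def speed_command(depth_m: float, stop_distance_m: float = 0.3,
                  gain: float = 0.8, max_speed: float = 1.0) -> float:
    """Proportional speed command from the distance to the nearest obstacle.

    error = depth - stop_distance; the command is gain * error clamped to
    [0, max_speed], so the car slows as it approaches and stops at the stop
    distance instead of reversing. No obstacle (infinite depth) gives max_speed.
    """
    error = depth_m - stop_distance_m
    return max(0.0, min(max_speed, gain * error))


# Hypothetical depths (meters) sampled from the region in front of the car.
roi = [2.0, 0.01, 1.2, float("inf")]
print(speed_command(nearest_depth(roi)))
```

A proportional term alone is enough to show the idea; in practice, smoothing the depth estimate over several frames would reduce jitter from noisy disparity values.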
