Miniproject Objective

Construct a 3D image of an indoor scene from images captured with a 3D time-of-flight (ToF) camera, and extract the depth information from the captured images.

Overview of TOF Camera

The miniproject uses the OPT8241 time-of-flight (ToF) sensor, which combines ToF sensing with an optimally designed analog-to-digital converter and a versatile programmable timing generator. The device provides 320 × 240 resolution data at frame rates of up to 150 frames per second and delivers a complete depth map of the scene. Together with the OPT9221 ToF controller, the OPT8241 forms a two-chip solution for building a 3D camera.

In a ToF camera, the transmitter consists of an illumination block that illuminates the region of interest with modulated light, and the sensor consists of a pixel array that collects the light reflected from the same region. The round-trip time of the light from the transmitter to the receiver indicates the distance of the object that reflected the signal. If the signal is periodic, the phase difference between the transmitted and received signals indicates the round-trip time.
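The relation between phase difference and distance can be illustrated with a short sketch. For a continuous-wave signal modulated at frequency f, a phase shift φ corresponds to a round-trip time of φ / (2πf), so the one-way distance is d = c·φ / (4πf). The modulation frequency of 20 MHz used below is only a placeholder chosen for illustration, not a setting taken from this project, and the function names are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def phase_to_distance(phase_rad, mod_freq_hz):
    """One-way distance in metres for a measured phase shift (radians)."""
    # Round-trip time t = phase / (2*pi*f); one-way distance d = c * t / 2.
    return C * phase_rad / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz):
    """Largest distance that can be measured before the phase wraps past 2*pi."""
    return C / (2.0 * mod_freq_hz)


# Assumed (hypothetical) modulation frequency, for illustration only.
F_MOD_HZ = 20e6

distance_m = phase_to_distance(math.pi / 2, F_MOD_HZ)
max_range_m = unambiguous_range(F_MOD_HZ)
print(f"distance: {distance_m:.3f} m, unambiguous range: {max_range_m:.3f} m")
```

Because the phase repeats every 2π, objects farther away than the unambiguous range alias back to a shorter distance; a lower modulation frequency extends the range at the cost of depth resolution.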

Required libraries

- PCL-1.7.2

- libusb-1.0-0-dev

- libudev-dev

- linux-libc-dev

- CMake 2.3.13

- Git

- G++ 4.8 or later

libusb-1.0-0-dev, libudev-dev, and linux-libc-dev are prerequisites of Voxel-SDK; they are needed to access USB data and device information.

CMake, Git, and G++ are required to compile Voxel-SDK from source.

Experimental Setup

On Linux, the camera delivers raw data, which has to be processed further with filters to obtain a clean image. We encountered some problems when constructing surfaces from the data.
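As an illustration of the kind of filtering meant here, the sketch below applies a per-pixel temporal median across several depth frames, which suppresses isolated outliers. This is an assumed example of a common denoising filter, not necessarily the filter used in this project; the function name and the tiny 2 × 2 frames are hypothetical.

```python
from statistics import median


def temporal_median(frames):
    """Per-pixel median across a list of equally sized 2D depth frames."""
    rows = len(frames[0])
    cols = len(frames[0][0])
    return [
        [median(frame[r][c] for frame in frames) for c in range(cols)]
        for r in range(rows)
    ]


# Synthetic 2x2 depth frames (metres); two of them contain a single outlier.
frames = [
    [[1.0, 1.0], [1.0, 1.0]],
    [[1.0, 9.0], [1.0, 1.0]],  # spike at row 0, column 1
    [[1.0, 1.0], [0.0, 1.0]],  # dropout at row 1, column 0
]

filtered = temporal_median(frames)
print(filtered)
```

A median is used rather than a mean so that a single bad sample does not shift the filtered value.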

Results

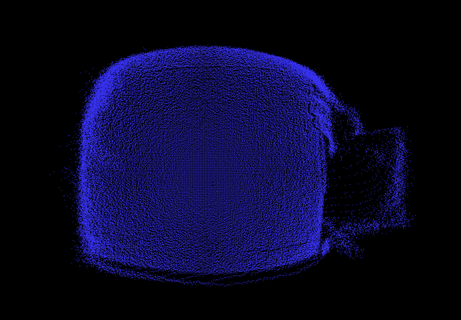

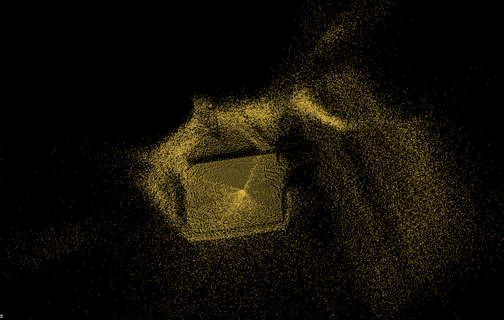

An object is kept at two different distances from camera and point cloud images are plotted in PCD viewer as shown in figures

Fig.1 Object at near distance

Fig.2 Object at far distance

Point cloud data (.vxl) from 5 frames was converted to binary format, and the depth values in a 4 × 4 pixel region at the center of the object were averaged. For the object at the near distance, the average depth obtained was 0.42 m; for the object at the far distance, it was 0.58 m.
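The averaging step can be sketched as follows. The code assumes the depth frames have already been decoded into 2D lists of depth values in metres; reading .vxl files is not shown. The function name, the patch centre, and the synthetic frames are hypothetical and serve only to demonstrate the calculation.

```python
def patch_mean_depth(frames, center, size=4):
    """Mean depth over a size x size pixel patch, averaged across all frames.

    center is the (row, col) pixel around which the patch is placed.
    """
    row0 = center[0] - size // 2
    col0 = center[1] - size // 2
    total = 0.0
    count = 0
    for frame in frames:
        for r in range(row0, row0 + size):
            for c in range(col0, col0 + size):
                total += frame[r][c]
                count += 1
    return total / count


# Synthetic data: 5 frames of 240 rows x 320 columns, each with a flat depth
# that varies slightly from frame to frame around 0.50 m (placeholder values).
frames = [
    [[0.50 + 0.01 * (k - 2)] * 320 for _ in range(240)]
    for k in range(5)
]

mean_depth = patch_mean_depth(frames, center=(120, 160))
print(f"average depth: {mean_depth:.2f} m")
```

Averaging over both the patch and the frames reduces the influence of per-pixel noise on the reported depth.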

References

- Time-of-Flight Camera – An Introduction – http://www.ti.com/lit/sloa190

- Introduction to the Time-of-Flight (ToF) System Design – http://www.ti.com/lit/sbau219

- Voxel-SDK and associated libraries – https://github.com/3dtof/voxelsdk/wiki

- Point Cloud Library (PCL) tutorials – http://pointclouds.org/documentation/tutorials/

Future Scope

As future work, point clouds captured from different angles can be combined with stitching (registration) algorithms to form a single point cloud.

Team Members

- Marem Raja Sekhar

- Rapolu Shivanand
